Scalecast Official Docs

The practitioner’s forecasting library, including automated model selection, model optimization, pipelines, visualization, and reporting.

$ pip install --upgrade scalecast

Forecasting in Python with minimal code.

import pandas as pd
from scalecast.Forecaster import Forecaster
from scalecast import GridGenerator

GridGenerator.get_example_grids() # example hyperparameter grids

data = pd.read_csv('data.csv')
f = Forecaster(
    y = data['values'], # required
    current_dates = data['date'], # required
    future_dates = 24, # length of the forecast horizon
    test_length = 0, # set a test set length or fraction to validate all models if desired
    cis = False, # choose whether or not to evaluate confidence intervals for all models
    metrics = ['rmse','mae','mape','r2'], # the metrics to evaluate when testing/tuning models
)
f.set_estimator('xgboost') # select an estimator
f.auto_Xvar_select() # find best look-back, trend, and seasonality for your series
f.cross_validate(k=3) # tune model hyperparams using time series cross validation
f.auto_forecast() # automatically forecast with the chosen Xvars and hyperparams
results = f.export(['lvl_fcsts','model_summaries']) # extract results

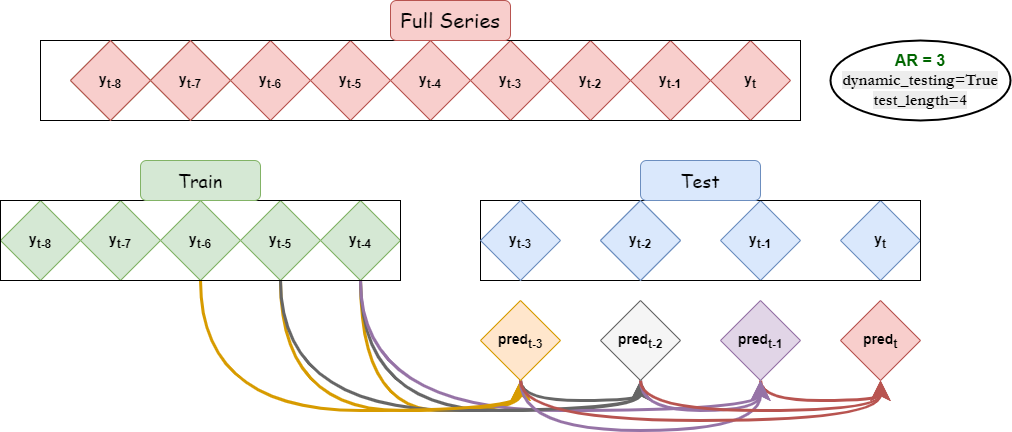

Dynamic Recursive Forecasting

Scalecast allows you to use a series’ lags (autoregressive, or AR, terms) as inputs by employing a dynamic recursive forecasting method to generate predictions and validate all models out-of-sample.

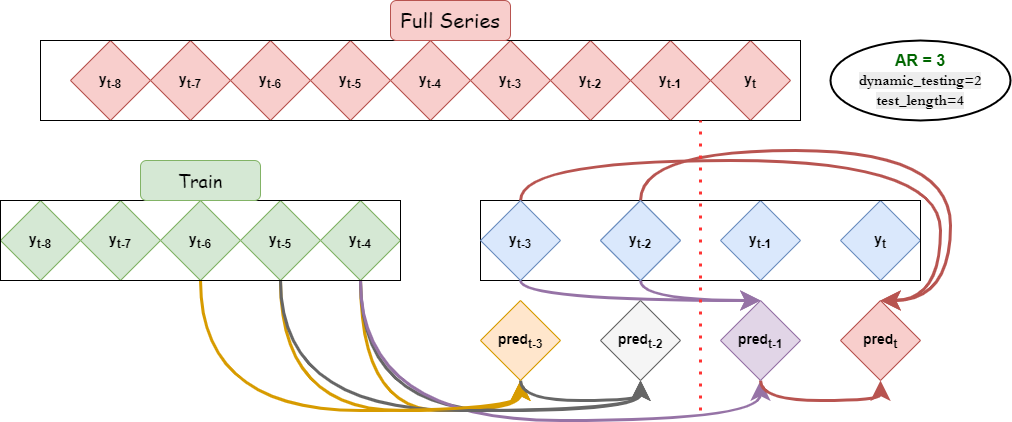
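The idea of feeding predictions back in as lags can be sketched in a few lines of plain Python. This is only an illustration of the general technique, not scalecast's internal code: the helper names `fit_ar1` and `recursive_forecast` are hypothetical, and the model is assumed to be a simple one-lag regression.

```python
def fit_ar1(y):
    """Fit y[t] = intercept + slope * y[t-1] by ordinary least squares."""
    x, target = y[:-1], y[1:]
    n = len(x)
    mean_x = sum(x) / n
    mean_t = sum(target) / n
    cov = sum((a - mean_x) * (b - mean_t) for a, b in zip(x, target))
    var = sum((a - mean_x) ** 2 for a in x)
    slope = cov / var
    return mean_t - slope * mean_x, slope


def recursive_forecast(y, intercept, slope, steps):
    """Forecast `steps` periods ahead, reusing each prediction as the next lag."""
    last = y[-1]
    preds = []
    for _ in range(steps):
        last = intercept + slope * last  # the prediction becomes the next step's lag
        preds.append(last)
    return preds


# Toy series generated by y[t] = 0.5 * y[t-1] + 1 (illustrative data only)
series = [10.0]
for _ in range(11):
    series.append(0.5 * series[-1] + 1.0)

intercept, slope = fit_ar1(series)
forecast = recursive_forecast(series, intercept, slope, steps=3)
```

Because no actual values exist beyond the end of the series, every step after the first depends on an earlier prediction, so errors can compound as the horizon grows.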

Dynamic Recursive Forecasting with Peeking

The recursive process can be adjusted to let the model “peek” at actual values during the testing phase. Specify dynamic_testing=<int> to set the number of steps after which the model is shown actual values again. This lets you report test-set metrics as an average of rolling, shorter-horizon forecasts, which gives a better idea of how well your algorithm predicts over a specific forecast horizon. You can also select models that are optimized for a given forecast horizon.

dynamic_testing=True is the default. dynamic_testing=1 or dynamic_testing=False means the test-set metrics are averages of one-step forecasts.
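A minimal sketch of how peeking changes test-set predictions, assuming a one-lag model with fixed coefficients. The function `rolling_test_forecast` and its `peek_every` argument are hypothetical names used for illustration; they are not part of the scalecast API and only mimic the role that an integer dynamic_testing value plays.

```python
def rolling_test_forecast(train, test, intercept, slope, peek_every):
    """Predict the test set recursively, revealing actuals every `peek_every` steps."""
    history = list(train)
    preds = []
    for start in range(0, len(test), peek_every):
        chunk = test[start:start + peek_every]
        last = history[-1]  # each chunk starts from the latest actual value
        for _ in chunk:
            last = intercept + slope * last  # within a chunk, predictions feed the next lag
            preds.append(last)
        history.extend(chunk)  # the "peek": actual values replace the predictions
    return preds


train = [1.0, 2.0, 3.0]
test = [4.0, 5.0, 6.0, 7.0]

# A naive model (intercept 0, slope 1) makes the effect easy to see.
fully_dynamic = rolling_test_forecast(train, test, 0.0, 1.0, peek_every=len(test))
two_step = rolling_test_forecast(train, test, 0.0, 1.0, peek_every=2)
one_step = rolling_test_forecast(train, test, 0.0, 1.0, peek_every=1)
```

With this naive model, the fully dynamic run never sees the test values, while the one-step run predicts each test observation from the actual value just before it. Averaging errors over the shorter rolling chunks is what yields horizon-specific test metrics.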

Links

- Readme

Overview, starter code, and installation.

- Introductory Notebook

A well-organized notebook that will quickly show you a lot of cool features.

- Examples

Other example notebooks.

- Forecaster and MVForecaster Attributes

Key terms that will make your life easier when reading documentation.

- Forecasting Different Model Types

What models are available?

- Changelog

Recent additions and bug fixes.